How to...deal with Sampling Datasets

To Sample or Not to Sample?

That is often the question for data preppers. In the world where data volumes are growing, storage solutions are getting cheaper and data creation is easier than ever - understanding when to sample datasets is becoming a key skill in the Data Preparation process.One simple rule... All if possible

The reason why we use data is to find the story, trends and outliers within it so to make better decisions in our everyday and working lives. Therefore, why not aim to use all the data and information you can to do that?Using the full data set is not always possible though. The reason why Preppin' Data exists is because data often needs to be prepared for analysis. Some data is so messy or uncomputable that we have no choice but to remove it. This challenge is not what we mean by sampling though. Sampling is about using a subset of the full data set - not because the data can't be cleaned but for lots of separate reasons.

So why should you sample?

Avoid technical limitations - volume

The world is swimming in data and there is no sign of stopping yet. Many datasets have gone from being possible to be stored in files to impossible due to the volume. Databases are being relied on more to support the volume of data. The good news is that if your data is in a database, someone has at least architected it to be held in that form. The likely data prep challenges here are:- Joining other datasets together - database tables are rarely in the perfect form for you analysis. When working out what is useful for your analysis and what isn’t, taking small samples of the data tables to understand how they can be joined or what data fields you need from each table is important. Samples allow you to work faster by removing long processing times of joining large data sets together (especially if you don’t get the right join condition(s)).

- Determining your ideal structure for analysis - database tables are designed for the storage of data more than they are designed to be perfect for your analysis. Pivoting data, removing unrequired fields like database keys / IDs and filtering to relevant time periods are common techniques that you can apply more quickly using samples rather than having to work with the full dataset.

- Structure - what columns are there, do you need to add more (calculations) or get rid of some?

- Cleanliness - how clean are those data fields? Check whether the dataset has concatenated fields that you need to break apart, clean up strings of text to turn them into meaningful categories or making sure there are no foreign characters slipping in to your measures.

Avoid technical limitations - velocity

Media streaming services and the Internet of Things are just two current areas that face this daily battle. Long gone are the days where an overnight batch run to update key data sources was sufficient. The speed at which data is created by users of modern media services and digital platforms means that data preparation can be forever a moving beast. By using samples of this data, you are likely to avoid many of the pitfalls of trying to use all the data, all the time. A sample will allow you to take the structure you need to prepare and freeze it in time to deal with the two main challenges in data prep:Using a sample of the data, as more data floods in, works as the logic you create, can then be applied to that live stream by removing the restriction that is creating that sample.

Sample to reduce build times (slow connections)

My early career as many others are sat within large corporate companies. Whilst fantastic opportunities can come from this, so will a serious amount of frustration with slow computers, servers and connections between them. In one institution I used to use two computers to allow one to run queries whilst I was building the next set of analytical queries on the other. My love of coffee comes when I used to have both machines running queries at the same time so would grab a coffee instead. Using samples to enable me to set up the data structure and analysis was key to keeping my pace of delivery high and caffeine levels lower. When I had the queries structured, joins checked and relevant filters in place, then I had to wait for the full dataset to run but I did so in confidence knowing I had done everything I could to just do it once.

Sample to determine what you need

Another use of sampling is because you don’t actually know what you need. In the Plan Your Prep section, we advocated the use of sketching out what you were likely to need to complete your analysis. However, sometimes the only way to iterate that need is to try and start to form that analysis. Multiple iterations of Data Preparation is needed as much as multiple iterations of your analysis as people learn and ask more. Samples of data can start to let you get the feel of what additional changes you want to make. Writing out an output that takes a long time to form is only an issue if you have to do it time and time again.

Sampling techniques

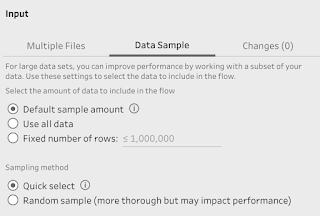

Tableau Prep is actually based on using samples during the formation of your Data Preparation. Through the clever use of algorithms, Prep will automatically begin to allow you to see the full shape of your data, categories present and distribution of values without having to run the full data set through each step until you are ready to form the final output where Tableau will then use the full input data set unless instructed otherwise. If you have wider datasets (more columns), Tableau's default sampling will bring back fewer rows / records of data than if you use thinner (fewer columns) data sets.

Even though Prep is sampling the data, you might want to control the sample yourself. There are two basic controls for this within the Input Step:

Fixed number of rows (the top of the table)

A randomised sample (less efficient to run)