2019: Week 25 When PD met Workout Wednesday

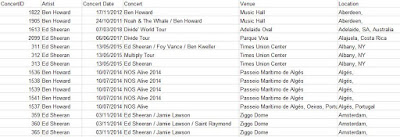

When Lorna suggested we set up a challenge for Tableau users to not only complete a Workout Wednesday live but also use Tableau Prep Builder to prepare their data, we jumped at the chance to collaborate. This week is held as a live session (I'm waving if you are in the room, and if you're not here, you're welcome to take part too), so we have built a combined challenge that should only take a few hours in total, even if you are new to either tool.

So what's the challenge? To celebrate Tableau's Music month, Lorna found a great data source on one of her favourite artists (Ed Sheeran), which led me to ask a question about one of my faves (Ben Howard). We want to analyse the two artists' careers based on their touring patterns: as two UK-based singer-songwriters who appeared on the UK music scene at similar times, how have they developed?

The Preppin' Data part

We have taken the gig history from concertarchives.org and done a little pre-cleaning as we wanted the ch...