2019: Week 42

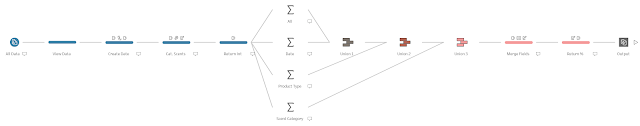

In Week 26's challenge, we took the opportunity to use only mouse clicks in Prep to see how far we could get in preparing some data for Chin & Beard Suds Co. This time, the challenge has more elements, as Tableau Prep development keeps adding wonderful features.

Things you might want to look out for this week to help:

- Data Roles: this 'How to...' post will be useful if you haven't used them before
- Prep's recommendation icon

This week's challenge involves helping Chin & Beard Suds Co. go global. We are looking to expand our stores' footprint overseas and therefore need to look at the potential costs and returns of those sites. An agency has done lots of the work for us, but they aren't the best at data entry. They've sent a messy file that they haven't had time to clean up, so any inaccurate data should be removed. Don't worry folks, we won't be using them again!

Requirements

Sheet 1 - The Main Data Set Cu...