2019: Week 42 Solution

This week we focused on the functionality within Tableau Prep by setting a ‘clicks only’ challenge, where no calculated fields would be allowed to be typed out. Within this solution we find out where the best locations would be for Chin & Beard Suds Co when they expand overseas.

The first part of the task was to split out various fields within the data. First we are going to use Prep’s Split functionality to break the location into two fields, City and Country. To do this we use the Customer Split, then split all values using a comma as the separator:

The next split that is needed is both the Potential Sales and Store Cost fields. Both of these contain the figures and also the currency code, but we want these split out into separate columns. Therefore, we use the custom split functionality again, but this time we split all values out using a single space:

After splitting these values, we need to do some tidying up of unwanted fields and renaming the newly split fields so that they make more sense. For example renaming the City, Country, Currency, Store Cost, and Potential Sales fields.

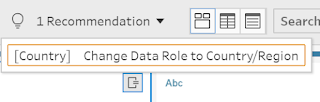

We can also use Prep’s functionality to set the data roles of some of the fields. We have some geographic based fields, therefore we can set these so that Tableau recognises them correctly. This functionality can be set on City, Country, and Zip Code:

We can also use Prep’s recommendation to change these data roles, as Tableau Prep will automatically identify that these are geographical fields, and then present some suggestions as to what it thinks the correct data role should be.

After updating the data roles, you’ll notice that some of the countries and cities have been input incorrectly. As a result of this will exclude them from our data set, by clicking on the values that we want to remove then pressing the ‘Exclude’ button:

Once we are happy that we have cleaned the initial data set, we can now join the Currency Conversion table. Using an inner join on Currency, this will bring both of the tables together into one data set, and then we can remove any duplicated fields.

The next step is to remove any instances where the Store Cost is higher than Potential Store Sales. Normally we could do this by typing out a condition within the filter, however we aren’t allowed to use that functionality this week. Therefore, as a result we can use a self join to overcome this problem.

First we will need to create an extra step to duplicate the data, we can then join both of these steps to one another using the following inner join conditions:

This technique is acting like a filter, as the inner join is removing any fields where the Store Cost is less than or equal to the Store Potential Sales. Within the next step we can remove any unwanted or duplicated fields that have been created as a result of the join.

Our next task is to identify the highest performing store within each Zip Code. To do this we use the aggregation tool, to group by Zip Code and then return the Max potential sales within each Zip Code.

Using the newly created Max Sales field, joining this back to the original data will again act as a filter, as it will only retain the fields where the Zip Codes and Potential Sales are equal within both tables.

As a final step we can remove any duplicated fields, and use an Output tool to allow the user the output the data to a location of their choice.