How to... Output to a Database

When Tableau Prep originally was released, the tool was designed to prepare data for Tableau. By cleaning and manipulating data easily and effectively, Prep solved issues for more than just data to be used in Tableau. Therefore, the demand grew for the ability to create, not just Tableau Extracts or CSV files, but writing back to the original sources of much of the information - databases. This was a significant departure for Tableau as Desktop had always been a read only tool, so allowing Prep to change data permanently opened up opportunities, as well as risks.

This post will cover when you should look to write back to the database, how to write back to database and what to watch out for when doing so.

When selecting 'Write to a database table', an error symbol will appear to demonstrate the connection has not been completely set-up. To set-up the connection, you will first need to select a server to connect too. It is the server where the database is located on that you should select.

After selecting which type of database connection will be made, a prompt appears to set-up the connection. Here the server address, or IP address, can be entered in the Server box. An optional database can be set within this connection set-up but isn't essential and will be prompted in the next step if not. Depending on the authentication requirements of the database, either Windows Authentication can be used or a standard username and password that has been set-up on the database.

This post will cover when you should look to write back to the database, how to write back to database and what to watch out for when doing so.

When should you write to a Database?

As we've seen in all the other 'How to...' posts, messy and multiple datasets can take a lot of time to prepare. If you have taken the time and effort to make the data suitable for analysis, then it is likely you should make it available to others, or at publish it back to a source where no-one else will need to battle it once more. Here are some of the common situations you will find when thinking about publishing to a database:

Cleaned Up Data

Dirty data is the reason we have to battle with data in the first place. If you have cleaned the data once, why not load it back to where you sourced it from? Obviously care needs to be taken to not remove data that would be useful for others, or filtering out data that may still be needed by others. If the dataset is clean and ready for analysis and none of the downsides are met, plus you'll need to have write / overwrite permissions on the database, but writing the clean data back can prevent future rework.

If the data is sourced from a system load, the flow may need to be refreshed on a regular schedule by you or Prep Conductor to prevent dirty data being pushed back into the now clean table as new records get added. This situation can also be handled by working with your data teams to show them the manipulations you have made and why as this can probably be built into the normal load process.

Simplify Complicated Joins

Joining tables together can create very useful datasets for analysis. However, the set-up of these joins can be complex through a combination of Join Types and Join Conditions. Therefore, if you have used Tableau Prep to Join datasets together, outputting the resulting dataset can either provide others with the benefits, or save them from having to form those datasets themselves. This will avoid mistakes and rework, making the analysis much easier for users of those datasets.

Building Staging / Reference Tables

Tableau Prep can be used to add dataset to the database to help make analysis easier and more detailed. Staging tables are used where messy, unstructured data is loaded into a database and is worked through various stages of improvement to the point of it being used as a production table. Prep can help with the processing of these sources to determine the manipulation and cleaning that is required.

Reference tables can be used to replace numeric values that are easier to store and faster to process than holding longer string data values. Rather than repeatedly holding the long string values, you should replace those values by a number instead. Prep can be used to form these tables using the row_number() function and an aggregation step.

Cleaned Up Data

Dirty data is the reason we have to battle with data in the first place. If you have cleaned the data once, why not load it back to where you sourced it from? Obviously care needs to be taken to not remove data that would be useful for others, or filtering out data that may still be needed by others. If the dataset is clean and ready for analysis and none of the downsides are met, plus you'll need to have write / overwrite permissions on the database, but writing the clean data back can prevent future rework.

If the data is sourced from a system load, the flow may need to be refreshed on a regular schedule by you or Prep Conductor to prevent dirty data being pushed back into the now clean table as new records get added. This situation can also be handled by working with your data teams to show them the manipulations you have made and why as this can probably be built into the normal load process.

Simplify Complicated Joins

Joining tables together can create very useful datasets for analysis. However, the set-up of these joins can be complex through a combination of Join Types and Join Conditions. Therefore, if you have used Tableau Prep to Join datasets together, outputting the resulting dataset can either provide others with the benefits, or save them from having to form those datasets themselves. This will avoid mistakes and rework, making the analysis much easier for users of those datasets.

Building Staging / Reference Tables

Tableau Prep can be used to add dataset to the database to help make analysis easier and more detailed. Staging tables are used where messy, unstructured data is loaded into a database and is worked through various stages of improvement to the point of it being used as a production table. Prep can help with the processing of these sources to determine the manipulation and cleaning that is required.

Reference tables can be used to replace numeric values that are easier to store and faster to process than holding longer string data values. Rather than repeatedly holding the long string values, you should replace those values by a number instead. Prep can be used to form these tables using the row_number() function and an aggregation step.

How to set up Write to Database

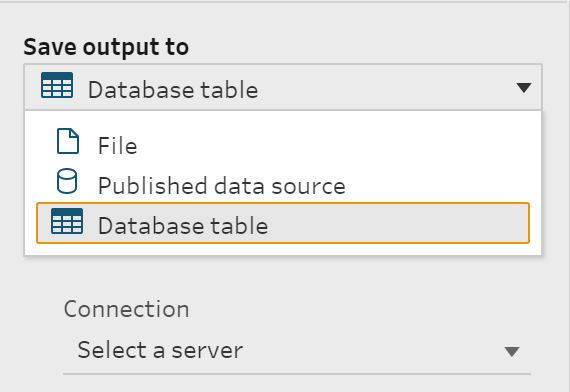

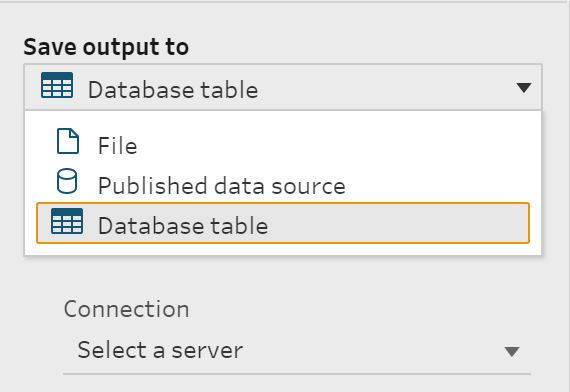

No matter what type of output you are making to a database, the early set-up stages are similar. Firstly, select the output as a database table.

When selecting 'Write to a database table', an error symbol will appear to demonstrate the connection has not been completely set-up. To set-up the connection, you will first need to select a server to connect too. It is the server where the database is located on that you should select.

When selecting the server, you will be prompted for which software the database is running on. For this example, I am using Microsoft SQL Server.

The next part of the set-up determines what Database the table being created, or edited, will reside.

If the table does not already exist, it is possible to add the table name in the Table section of the configuration.

The final part of the set-up is to pick what type of write / edit you want to make. These options are discussed in more detail below:

Create Table

By creating a new table, you are writing a new table that has previously not existed in the database. Databases often have naming conventions based on how they are used within the organisation. New tables should meet those naming conventions so they fit and are more likely to get used within the organisation. Any spaces in the database name will be replaced with an underscore as most databases refuse to hold columns with spaces within the names.

Append to Table

By selecting 'add data to table', Prep will add data to the end of an existing table or create a table if it doesn't exist. This option behaves as an incremental update to the table by adding the rows being processed within the flow, on to the end of the original table.

By creating a new table, you are writing a new table that has previously not existed in the database. Databases often have naming conventions based on how they are used within the organisation. New tables should meet those naming conventions so they fit and are more likely to get used within the organisation. Any spaces in the database name will be replaced with an underscore as most databases refuse to hold columns with spaces within the names.

Append to Table

By selecting 'add data to table', Prep will add data to the end of an existing table or create a table if it doesn't exist. This option behaves as an incremental update to the table by adding the rows being processed within the flow, on to the end of the original table.

If you add an additional column as part of the flow, this will be added to the dataset for the rows added.

Replace Data

By selecting the 'Replace data in table', the dataset in the table will be overwritten and fully refreshed with the dataset processed by the flow.

What to watch out for?

The most significant challenge with the ability to write to a database is overwriting tables incorrectly. Like any work with data, you have responsibility for your actions and understanding the impact you could have. Permissions on the database are likely to restrict what you are able to do until you have demonstrated the skills, understanding and capabilities to be granted permissions to the tables and data that would have bigger, potentially operational impacts, if incorrectly used or altered.

Most organisations control many tools and permissions to ensure 'production' data sources would not be affected until the changes had been tested in a number of test environments first. Therefore, the biggest risk is actually probably the least risky as there will be tighter controls around it.

Giving Prep Users 'sandpit' space to play and learn is going to be key to enable them to learn and develop the skills to be able to utilise the ability to write database tables. As covered earlier in the post, there are many benefits to being able to write to a database, in order to receive those benefits, you will need to enable the users with the ability to do so. The sandpit space is the ability to write database tables in a space where they won't be:

- Seen as production tables and used accidentally by others

- Won't have any operational importance for the organisation so can be altered and removed without affecting the organisation.

Overall, the ability to write to a database is a strong capability to add to the data preparation process. There are risks of overwriting data that may not be recoverable but these are countered by the permissions set on the database. Using Prep Builder to write to a database, and regularly scheduled through Prep Conductor to continually update, the solutions created in Prep can be productionalised to deliver clean, refreshed data to end-users.